Mission

Our brain continuously processes complex visual information, constructing a comprehensive understanding of the world around us from fleeting 2D retinal impressions. At the Brains and Machines Lab at BGU, we endeavor to understand human visual cognition by employing neural network modeling and model-driven experiments. Our research utilizes a range of techniques, including psychophysics, eye-tracking, and functional magnetic resonance imaging (fMRI), to probe the mechanisms underlying high-order human vision. By leveraging deep learning, we develop computational hypotheses that could explain the observed behavioral and neural data. We then design and conduct innovative model-driven experiments to empirically test these hypotheses, closing the loop between theory and experiment.

Team

Affiliated Students

These students are co-advised by Tal Golan but are primarily based in other research groups.

Undergraduate Researchers

Current undergraduate students participating in research projects in the lab.

Alumni

Selected Publications/Preprints

* Denotes equal contribution.

Resources

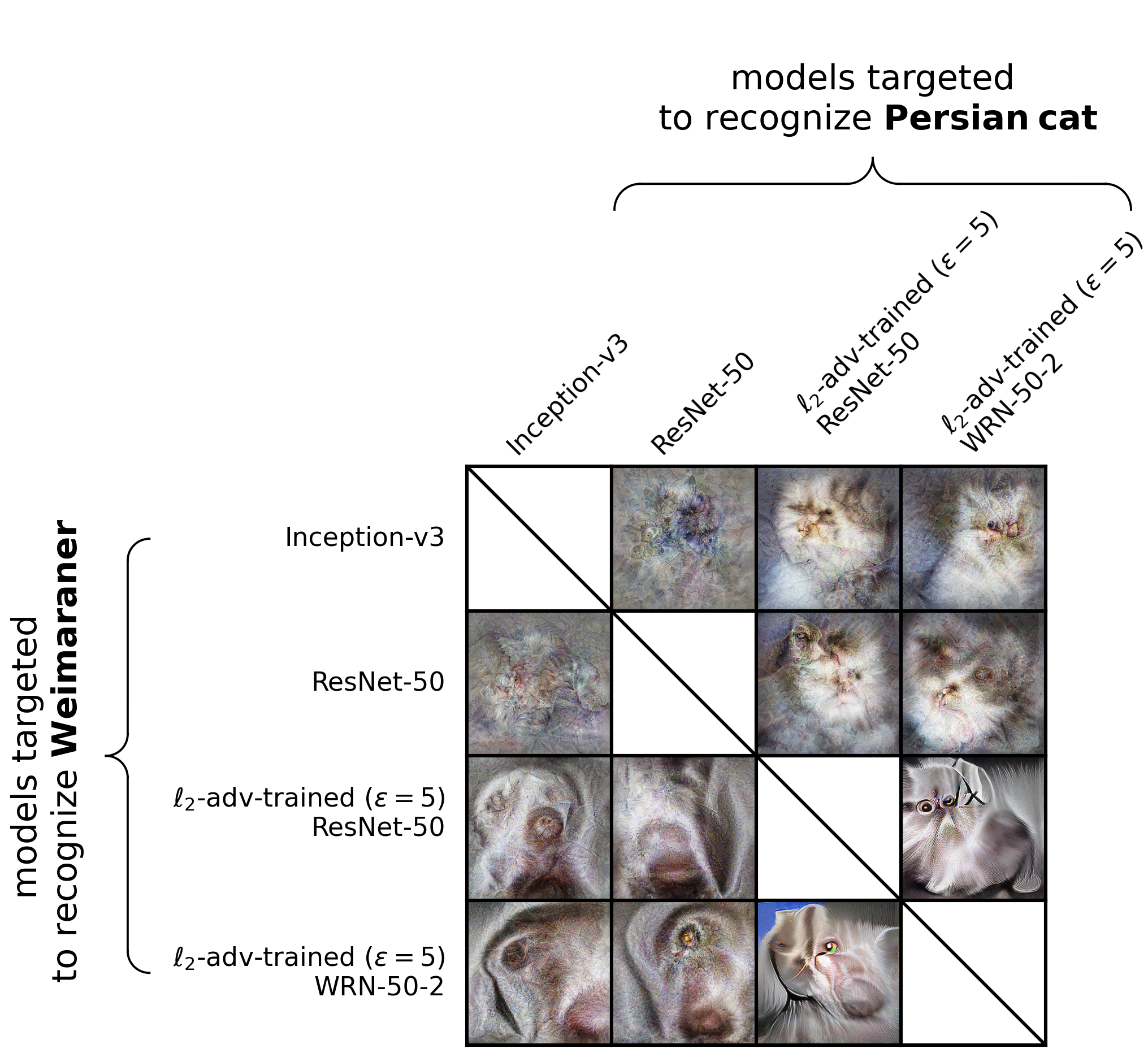

Synthesizing Controversial Stimuli (a tutorial with PyTorch)

github.com/kriegeskorte-lab/controversial_stimuli_tutorial

This is a PyTorch tutorial on synthesizing controversial stimuli to disentangle the predictions of object recognition models. This tutorial was presented at CCN 2021 (Cognitive Computational Neuroscience).

Metroplot - a compact alternative to pairwise significance brackets

github.com/brainsandmachines/metroplot

No more ugly brackets! Matplotlib-based visualization of pairwise comparisons.

Scientific Poster Design Guide

brainsandmachines.org/poster-design-guide

Tips for designing beautiful scientific posters by Itay Inbar.

Openings

- Postdoctoral opportunities: We are always looking for talented postdoctoral researchers to join our lab. We are happy to help candidates prepare competitive applications, well in advance, for fully funded positions through programs such as the Azrieli International Postdoctoral Fellowship, Marie Skłodowska-Curie Actions, or the Fulbright Postdoctoral Fellowship.

- Doctoral and Master's students: The lab is currently at capacity. We will recruit new Master's and PhD students for the academic year starting in October 2027.

- Undergraduate students: Recruitment for a neuroscience project starting in August 2026 will open in March 2026.

If you are interested in joining the lab, please send your CV, current grade sheets, and a brief statement of research interests to golan.neuro@bgu.ac.il.

Contact

Email: golan.neuro@bgu.ac.il

Lab: Building 90, Room 4

PI Office: Building 93B, Room 4

Address: Department of Industrial Engineering and Management, Ben-Gurion University of the Negev, David Ben Gurion Blvd 1, Be'er Sheva, Israel